Could AI replace SOC Analysts?

Could AI Agents replace SOC Analysts in the future? Learn why those who say "no" might be in the wrong!

This topic has been doing the rounds in the cybersecurity sphere ever since ChatGPT was launched a couple of years ago. Could we replace SOC Analysts with an AI-based solution? Is this just another hype-ridden topic, or something we should actually think about a bit further?

A little bit about me: I have both worked as a SOC Analyst and trained upcoming analysts for the role, mostly focused on Microsoft Sentinel and cloud environments. I have also worked quite a bit under the hood, developing analytics rules and operations (the O in SOC😉).

What do SOC Analysts do?

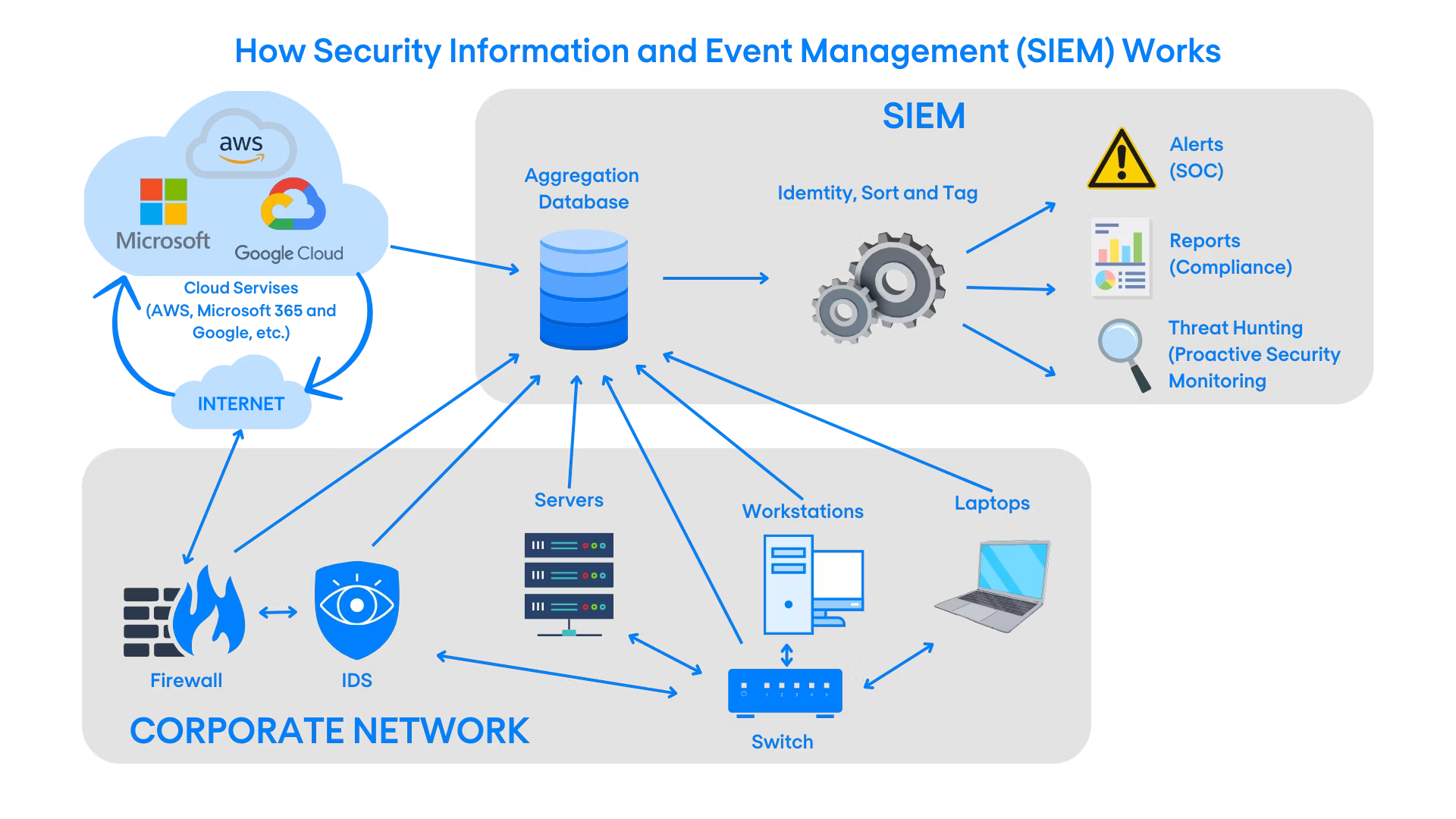

So what do SOC analysts do? They watch for alerts (or "incidents", as they're sometimes called) from a central system and react to them. The system in question is a SIEM (Security Information and Event Management) system, where logs from IT systems are collected and processed, and which raises alerts from those logs, usually based on rules.
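To give a rough idea of what "alerts based on rules" means, here is a minimal sketch of a threshold rule in Python. It is purely illustrative: the event fields (`user`, `outcome`), the rule name and the threshold of 5 are assumptions made up for this example, and a real SIEM would express this in its own query language rather than in Python.

```python
from collections import Counter


def failed_login_alerts(events: list[dict], threshold: int = 5) -> list[dict]:
    """Raise one alert per user with at least `threshold` failed logins."""
    # Count failed logins per user across the batch of log events.
    failures = Counter(e["user"] for e in events if e["outcome"] == "failure")
    # Emit an alert for every user at or above the threshold.
    return [
        {"rule": "excessive_failed_logins", "user": user, "count": count}
        for user, count in sorted(failures.items())
        if count >= threshold
    ]


# Hypothetical sample data: alice fails five times, bob fails twice.
sample_events = (
    [{"user": "alice", "outcome": "failure"}] * 5
    + [{"user": "bob", "outcome": "failure"}] * 2
    + [{"user": "bob", "outcome": "success"}]
)
alerts = failed_login_alerts(sample_events)
print(alerts)
```

The rule has no idea whether alice simply forgot her password or is being brute-forced, and that is exactly the question that lands on the analyst's desk.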

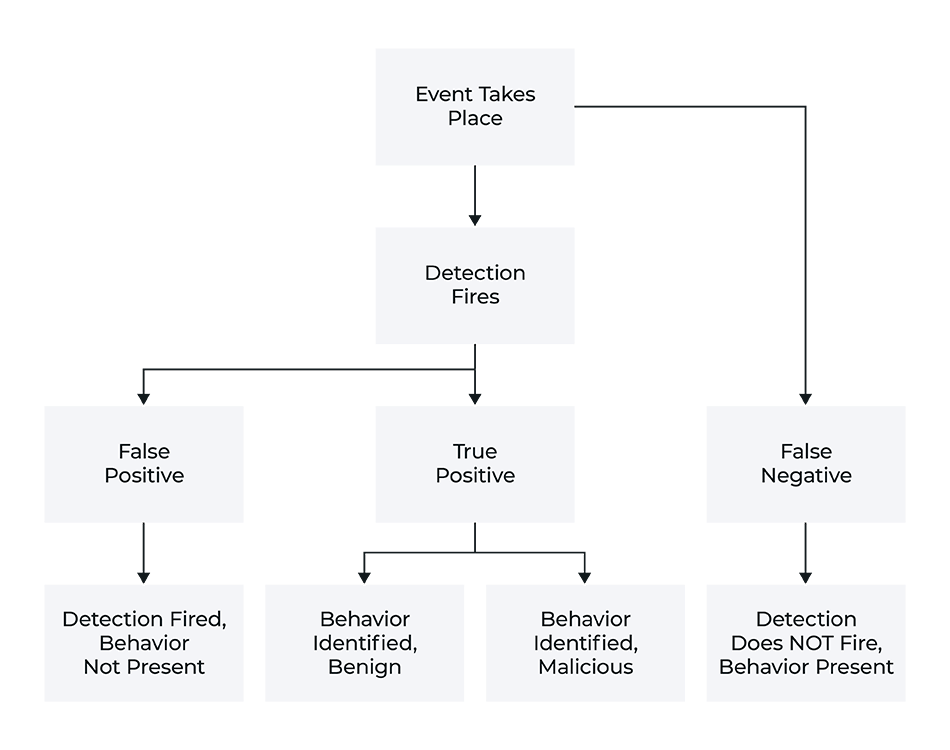

What a SOC analyst is usually concerned with (at the Tier 1 level, where most newcomers are) is a simple question: "Is this alert a false positive, or something that should be investigated further?". This on its own is the job of Tier 1: categorizing alerts by reading the logs they're based on. It sounds like a simple job, but it actually requires at least some level of proficiency in the systems you are monitoring. You have to know how the system works in order to notice that something's wrong.

The usual conclusion

What makes the SOC a big deal for organizations is its operating model. SOCs usually operate 24/7, since "the bad guys don't work within office hours". What this means from an operations standpoint is that you need a lot of people working in shifts at each tier of the SOC. And that means money.

This seems like a prime opportunity for anyone who's not all that familiar with the field to say: "Hey, we can replace that with AI right now!", but when you look at the issue more closely, it becomes much more complicated than that.

The devil is in the details

The reason why all of this hasn't been automated yet is the type of data we deal with in the SOC. The difference between a clear but benign false positive and a critical true positive is in many cases very small. Telling them apart is a skill that is often only built up with time and practice. You will only recognize a critical true positive once you've seen 100 benign false positives and learned to notice the slight difference.

And this is why, when you go to Google to gauge the usual market sentiment on the question "Will AI replace SOC Analysts?", the answer you come back with is: "No, because it's too difficult and there are too many variables you cannot trust AI with." They could be right, at least in some respects, but the question goes deeper than that. Also, keep in mind that most of the parties making these statements are SOC providers or classic EDR (Endpoint Detection & Response) providers, who do have skin in the game, so to speak.

Let's make bad slightly better

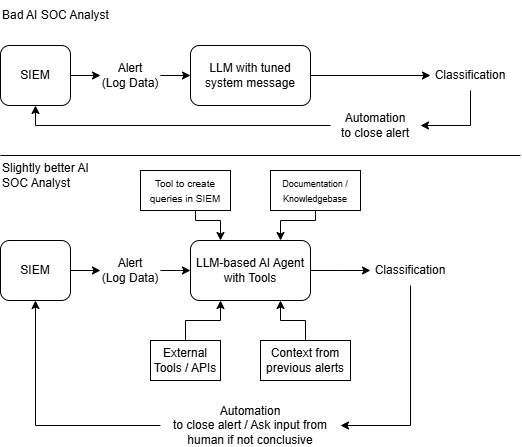

What I think has been the issue around this question is that people are looking at it in a way that's not realistic. They conduct a test where they copy-paste 10 rows of log data into ChatGPT and ask it "tell me if this is either a false positive or a true positive", and scream when it fails to classify properly. But if we take a more AI agent-based approach, I think you could build quite a good solution.

What is the main difference between human analysts and an AI? One thing is context. The human has context on where the alert is coming from, what has happened before that moment and what kind of alerts usually come out of the SIEM. The AI Agent should also have this context to use, since it's crucial to the classification process. The human analyst will also often use external tools like VirusTotal to check if URLs or IP addresses are labeled as malicious. Sometimes these tools are already bolted onto SIEM systems through automations. The AI Agent should also be able to use external services as its aid.

If we equip the AI to work in an agent-based workflow by itself, where it can utilize external tools and receive context and documentation, it could reason over the evidence and make additional queries into the SIEM to investigate further. It would go through all these steps and reason over the question before making its classification. If the AI Agent is not sure enough of its conclusion, the case is forwarded to a human analyst, who can check it for errors.
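To make that workflow a bit more concrete, here is a minimal Python sketch of the triage step, under some loud assumptions. Every name in it (`triage`, `Verdict`, `TriageResult`, the alert fields) is hypothetical. `classify` stands in for the LLM call and `lookup_reputation` for a VirusTotal-style lookup; the stubs below do not call any real API. The 0.8 confidence threshold is an arbitrary placeholder, not a recommendation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    label: str         # "false_positive" or "true_positive"
    confidence: float  # 0.0 to 1.0, as reported by the classifier


@dataclass
class TriageResult:
    label: str
    confidence: float
    escalated: bool    # True when a human analyst should review the case
    evidence: dict


def triage(
    alert: dict,
    history: list[dict],
    lookup_reputation: Callable[[str], str],
    classify: Callable[[dict], Verdict],
    threshold: float = 0.8,
) -> TriageResult:
    """Collect context, classify the alert and escalate when unsure."""
    # Bundle the same context a human analyst would look at.
    evidence = {
        "alert": alert,
        "recent_alerts": history,
        "reputation": {ip: lookup_reputation(ip) for ip in alert.get("ips", [])},
    }
    verdict = classify(evidence)
    # Anything below the threshold goes to a human for error checking.
    escalated = verdict.confidence < threshold
    return TriageResult(verdict.label, verdict.confidence, escalated, evidence)


# Stand-in for an external reputation service (hypothetical data).
def fake_reputation(ip: str) -> str:
    return "malicious" if ip == "203.0.113.7" else "unknown"


# Stand-in for the LLM: confident only when an IP is known to be malicious.
def fake_classify(evidence: dict) -> Verdict:
    if "malicious" in evidence["reputation"].values():
        return Verdict("true_positive", 0.95)
    return Verdict("false_positive", 0.6)


confident = triage({"ips": ["203.0.113.7"]}, [], fake_reputation, fake_classify)
unsure = triage({"ips": ["198.51.100.1"]}, [], fake_reputation, fake_classify)
print(confident.label, confident.escalated)
print(unsure.label, unsure.escalated)
```

A real agent would loop: it would be allowed to run follow-up SIEM queries and add the results to `evidence` before classifying. The sketch leaves that out to keep the escalation logic visible.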

What could go wrong?

This kind of architecture of course brings up some possible issues:

- How big of a context window will we need? If we attach a lot of log entries to the prompt, the context window could limit us, especially with newer reasoning models.

- LLMs are not yet the best at understanding tabular data, rather preferring unstructured data. Luckily, this can in many cases be tackled by passing the data as JSON.

- What is an acceptable level of "conclusive"? Will the AI even be able to recognize when it is not sure of something, or will it just push forward, confident as ever?
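The first two points can be sketched together. The snippet below is a minimal example that turns tabular log rows into one JSON object per row and keeps only the newest rows that fit a size budget. It assumes the rows arrive as CSV text ordered oldest to newest, and it uses character count as a crude stand-in for tokens; the function name, the budget and the sample rows are all made up for illustration.

```python
import csv
import io
import json


def rows_to_json_lines(csv_text: str, max_chars: int = 4000) -> list[str]:
    """Convert CSV log rows to JSON strings, keeping the newest rows in budget."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = [json.dumps(row, sort_keys=True) for row in reader]
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest row until the budget is used up.
    for line in reversed(lines):
        cost = len(line) + 1  # +1 for the newline that joins the rows
        if used + cost > max_chars:
            break
        kept.append(line)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


# Hypothetical sign-in log rows, oldest first.
SAMPLE = (
    "TimeGenerated,UserPrincipalName,IPAddress,ResultType\n"
    "2024-01-01T10:00:00Z,alice@example.com,198.51.100.1,0\n"
    "2024-01-01T10:05:00Z,bob@example.com,203.0.113.7,50126\n"
    "2024-01-01T10:06:00Z,bob@example.com,203.0.113.7,0\n"
)

print("\n".join(rows_to_json_lines(SAMPLE)))
```

Keeping the newest rows is only one possible choice. An agent could just as well summarize the older rows or fetch them on demand with a follow-up query.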

What does this mean in the long run?

What I am trying to make a case for here is that we should not ignore the possibilities AI Agents bring to the security field, no matter how scared we are for our jobs or the jobs of our SOC Analysts. With the help of AI, SOC Analysts could essentially be promoted to a more senior position, giving their input when it is actually needed, and not numbing their brains on thousands of benign positive cases during the late (and expensive) night hours.

Next steps

So, now all that's left to do is to build that AI Agent and see if it actually works! That's something I'll surely be looking into, and I will post an update on this blog when I get some results!